SWE-Lego: Pushing the Limits of Supervised Fine-tuning for Software Issue Resolving

🤗 HF Dataset • 🤗 SWE-Lego-Qwen3-8B/32B • 🧑‍💻 Code • 📖 Paper

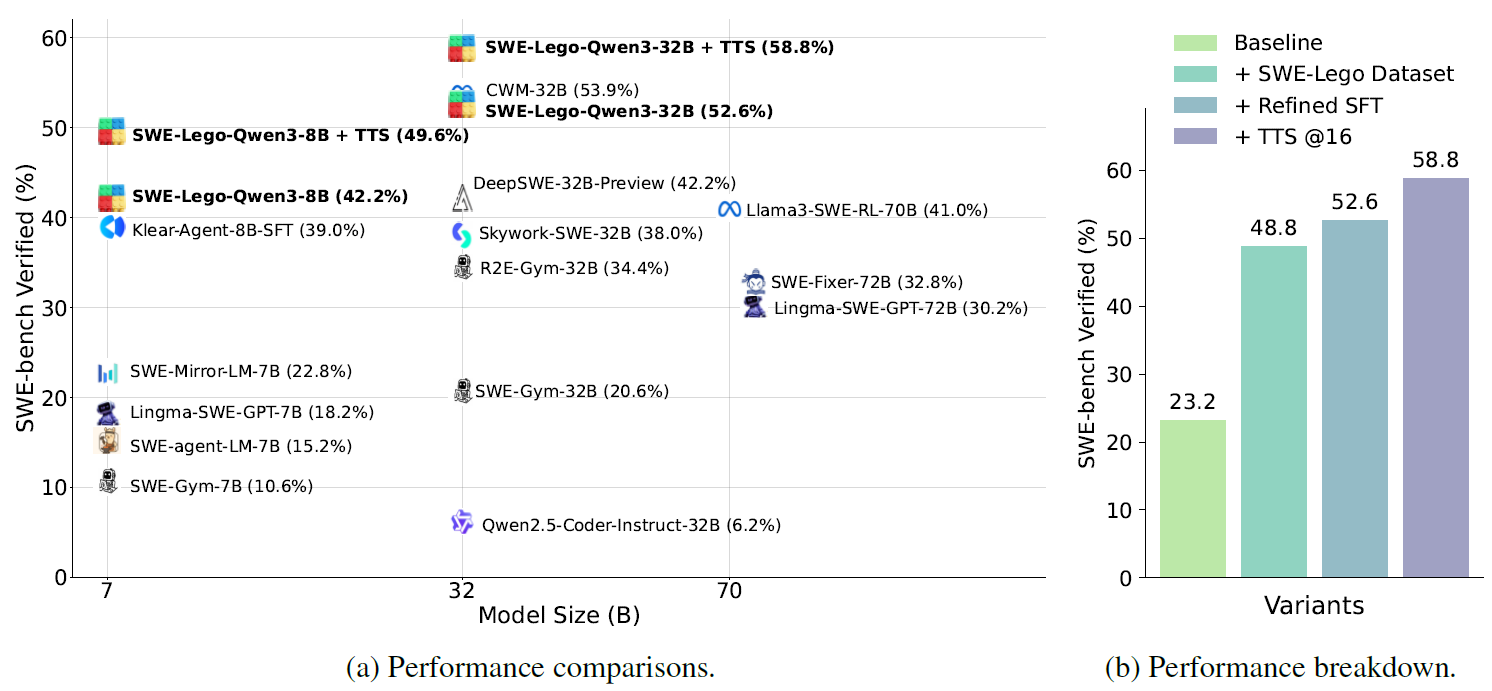

We present SWE-Lego, a supervised fine-tuning (SFT) recipe designed to achieve state-of-the-art performance in software engineering (SWE) issue resolution. SWE-Lego comprises three core building blocks:

- the SWE-Lego dataset, a collection of 32k high-quality task instances and 18k validated trajectories that combines real and synthetic data, which complement each other in quality and quantity;

- a refined SFT procedure with error masking and a difficulty-based curriculum, which demonstrably improves action quality and overall performance;

- a well-trained verifier for improving test-time scaling (TTS).
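The error-masking idea in the second building block can be illustrated with a minimal sketch. It assumes, for illustration only, that a trajectory is a list of chat turns, that an assistant turn is flagged as erroneous when its action triggered an error, and that such turns stay in the context but are excluded from the loss by setting their labels to -100 (the default ignore index of PyTorch's cross-entropy loss). The Turn class, its is_error flag, and the whitespace tokenizer are hypothetical stand-ins; the paper describes the actual masking rule.

```python
import zlib
from dataclasses import dataclass

IGNORE_INDEX = -100  # default ignore_index of torch.nn.CrossEntropyLoss


@dataclass
class Turn:
    role: str               # "system", "user", "assistant" or "tool"
    content: str
    is_error: bool = False  # hypothetical flag: this assistant action triggered an error


def tokenize(text: str) -> list[int]:
    """Hypothetical stand-in tokenizer: one deterministic fake id per whitespace-separated word."""
    return [zlib.crc32(word.encode("utf-8")) % 50_000 for word in text.split()]


def build_labels(turns: list[Turn]) -> tuple[list[int], list[int]]:
    """Return (input_ids, labels); only assistant turns without errors contribute to the loss."""
    input_ids: list[int] = []
    labels: list[int] = []
    for turn in turns:
        ids = tokenize(turn.content)
        input_ids.extend(ids)  # every turn, including failed actions, stays in the context
        if turn.role == "assistant" and not turn.is_error:
            labels.extend(ids)  # supervise correct actions
        else:
            labels.extend([IGNORE_INDEX] * len(ids))  # mask prompts, observations and failed actions
    return input_ids, labels


trajectory = [
    Turn("user", "Fix the failing test"),
    Turn("assistant", "run pytest", is_error=True),
    Turn("tool", "command not found"),
    Turn("assistant", "run python -m pytest"),
]
input_ids, labels = build_labels(trajectory)
print(sum(label != IGNORE_INDEX for label in labels), "of", len(labels), "tokens supervised")
# prints: 4 of 13 tokens supervised
```

Keeping the failed action in the input lets the model still see the error and the recovery that follows, while the masked labels stop it from imitating the mistake itself.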

Our models are fine-tuned from Qwen3-8B and Qwen3-32B exclusively with SFT. We evaluate them on SWE-bench Verified:

- SWE-Lego-Qwen3-8B: 42.2% Pass@1, 49.6% TTS@16

- SWE-Lego-Qwen3-32B: 52.6% Pass@1, 58.8% TTS@16
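The gap between Pass@1 and TTS@16 comes from sampling several rollouts per issue and letting the verifier choose one. A minimal best-of-k sketch follows. It assumes each rollout carries a scalar verifier score and a resolved flag from the SWE-bench harness. The Rollout class and its field names are hypothetical, and the paper's exact verifier and selection rule may differ. Pass@1 is estimated here as the per-issue resolved fraction averaged over issues.

```python
from dataclasses import dataclass


@dataclass
class Rollout:
    verifier_score: float  # hypothetical: verifier's estimate that this patch resolves the issue
    resolved: bool         # ground truth from the SWE-bench harness, used only for scoring


def pass_at_1(issues: list[list[Rollout]]) -> float:
    """Expected single-attempt success: per-issue resolved fraction, averaged over issues."""
    return sum(sum(r.resolved for r in rollouts) / len(rollouts) for rollouts in issues) / len(issues)


def tts_at_k(issues: list[list[Rollout]], k: int) -> float:
    """Best-of-k: the verifier picks one of the first k rollouts; count issues where that pick resolves."""
    hits = 0
    for rollouts in issues:
        pick = max(rollouts[:k], key=lambda r: r.verifier_score)
        hits += pick.resolved
    return hits / len(issues)


issues = [
    [Rollout(0.9, True), Rollout(0.2, False)],
    [Rollout(0.4, False), Rollout(0.7, True)],
    [Rollout(0.8, False), Rollout(0.1, True)],
]
print(pass_at_1(issues), tts_at_k(issues, k=2))
```

In this toy example the verifier lifts the success rate from 0.5 to about 0.67 by picking the resolving rollout for the first two issues. The third issue shows that the verifier can still rank a wrong patch first, which is why TTS@k stays below the fraction of issues with at least one resolving rollout.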

We have open-sourced our dataset, code, and training scripts to help the community scale and improve software engineering agents.

Reproduction Guide 🎯

1. 📦 Installation

git clone https://github.com/SWE-Lego/SWE-Lego.git

1.1 Installing the vLLM environment

conda create -n vllm python=3.12 -y

conda activate vllm

pip install vllm

1.2 Installing the OpenHands environment

For more details, refer to the OpenHands Development Guide.

cd SWE-Lego/OpenHands-0.53.0

conda create -n openhands python=3.12 -y

conda activate openhands

conda install -c conda-forge nodejs=24.4.1

conda install -c conda-forge poetry=2.1.4

pip install python-dateutil==2.9.0.post0

poetry run pip install datasets

make build

1.3 Installing the SWE-bench environment

cd SWE-Lego/SWE-bench-4.0.4

conda create -n swebench python=3.12 -y

conda activate swebench

pip install -e .

1.4 Installing the LLaMA-Factory environment

cd SWE-Lego/LLaMA-Factory-0.9.4.dev0

conda create -n lf python=3.12 -y

conda activate lf

pip install torch==2.8.0 torchvision==0.23.0 torchaudio==2.8.0 --index-url https://download.pytorch.org/whl/cu128

pip install -e ".[torch,metrics,deepspeed,liger-kernel]" --no-build-isolation

# Install flash-attn from a prebuilt wheel (CUDA 12, PyTorch 2.8, Python 3.12)

wget https://github.com/Dao-AILab/flash-attention/releases/download/v2.8.3/flash_attn-2.8.3+cu12torch2.8cxx11abiFALSE-cp312-cp312-linux_x86_64.whl

pip install flash_attn-2.8.3+cu12torch2.8cxx11abiFALSE-cp312-cp312-linux_x86_64.whl

pip install wandb

🤖 2. Inference and Evaluation of SWE-Lego-Qwen3-8B/32B

We use SWE-Lego-Qwen3-32B as an example.

2.1 Serving the model via vLLM

bash scripts/swe_lego_qwen3_32b/serve_vllm.sh
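Once the server is up, a single request can confirm that it answers before you launch inference. vLLM exposes an OpenAI-compatible HTTP API, and the sketch below calls its chat completions endpoint using only the standard library. It assumes the default address http://localhost:8000/v1 and a served model name of SWE-Lego-Qwen3-32B. Both values are hypothetical: use whatever host, port, and --served-model-name serve_vllm.sh actually sets.

```python
import json
import urllib.request

BASE_URL = "http://localhost:8000/v1"  # assumption: vLLM's default host and port
MODEL_NAME = "SWE-Lego-Qwen3-32B"      # hypothetical: must match the served model name


def build_chat_request(prompt: str, max_tokens: int = 64) -> urllib.request.Request:
    """Build a POST request for the OpenAI-compatible /chat/completions endpoint."""
    body = {
        "model": MODEL_NAME,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "temperature": 0.0,
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, timeout: float = 60.0) -> str:
    """Send the prompt to the running server and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=timeout) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]
```

With the server running, print(ask("Reply with one word: ready")) sends one short request. A connection error at this point usually means the server is still loading the weights or is listening on a different port.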

2.2 Running inference via OpenHands

bash scripts/swe_lego_qwen3_32b/infer.sh
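Between inference and evaluation, the agent's final diffs must be handed to the SWE-bench harness. The harness reads predictions as records with instance_id, model_name_or_path, and model_patch. The provided eval.sh may already perform this conversion, so the sketch below only illustrates the data flow. It assumes OpenHands writes one JSON object per line, with the patch stored under test_result.git_patch. That field layout is our assumption about OpenHands' SWE-bench output.

```python
import json
from collections.abc import Iterable
from pathlib import Path


def convert_lines(lines: Iterable[str], model_name: str) -> list[dict]:
    """Turn OpenHands output records (assumed layout) into SWE-bench prediction records."""
    predictions = []
    for line in lines:
        if not line.strip():
            continue  # tolerate blank lines
        record = json.loads(line)
        test_result = record.get("test_result") or {}
        predictions.append({
            "instance_id": record["instance_id"],
            "model_name_or_path": model_name,
            "model_patch": test_result.get("git_patch") or "",  # empty patch if the agent produced none
        })
    return predictions


def convert_file(src: Path, dst: Path, model_name: str) -> int:
    """Read an OpenHands output.jsonl and write predictions as JSON Lines; return the record count."""
    with src.open(encoding="utf-8") as f:
        predictions = convert_lines(f, model_name)
    with dst.open("w", encoding="utf-8") as f:
        for prediction in predictions:
            f.write(json.dumps(prediction) + "\n")
    return len(predictions)
```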

2.3 Running evaluation via SWE-bench

bash scripts/swe_lego_qwen3_32b/eval.sh
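The Pass@1 numbers above are resolve rates over the 500 task instances of SWE-bench Verified. As an arithmetic check, 52.6% of 500 corresponds to 263 resolved issues, and 42.2% corresponds to 211. The sketch below computes this rate from the harness's summary report. It assumes the report lists resolved instance IDs under resolved_ids, which is our assumption about the SWE-bench 4.x report layout. The report's file name depends on the model name and run ID that eval.sh uses.

```python
import json
from pathlib import Path

SWE_BENCH_VERIFIED_SIZE = 500  # task instances in SWE-bench Verified


def resolve_rate(report: dict, total: int = SWE_BENCH_VERIFIED_SIZE) -> float:
    """Percentage of all benchmark instances resolved; missing or errored runs count as failures."""
    resolved = set(report.get("resolved_ids", []))  # assumed report field
    return 100.0 * len(resolved) / total


def resolve_rate_from_file(path: Path, total: int = SWE_BENCH_VERIFIED_SIZE) -> float:
    """Load a harness report JSON file and compute its resolve rate."""
    with path.open(encoding="utf-8") as f:
        return resolve_rate(json.load(f), total)
```

The sketch divides by the benchmark size rather than by the number of submitted predictions. That way, skipped or crashed instances cannot inflate the score.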

🔥 3. Training of SWE-Lego-Qwen3-8B/32B

3.1 Downloading trajectories for SFT from Hugging Face

Download the resolved trajectories from Hugging Face and save them to LLaMA-Factory-0.9.4.dev0/data:

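import json
import os

from datasets import load_dataset

# Hugging Face dataset repos and the local file each one is saved to.
dataset_configs = [
    {
        "name": "SWE-Lego/SWE-Lego-Real-Data",
        "filename": "swe_lego_real_data_resolved_trajectories.json",
    },
    {
        "name": "SWE-Lego/SWE-Lego-Synthetic-Data",
        "filename": "swe_lego_synthetic_data_resolved_trajectories.json",
    },
]

# LLaMA-Factory reads training data from its data/ directory (assumes this runs from the SWE-Lego repo root).
output_dir = os.path.join("LLaMA-Factory-0.9.4.dev0", "data")
os.makedirs(output_dir, exist_ok=True)

for config in dataset_configs:
    # Load the "resolved" split and keep only the fields needed for SFT.
    ds = load_dataset(config["name"], split="resolved")
    processed_ds = ds.select_columns(["instance_id", "messages"])
    data_list = processed_ds.to_list()

    output_path = os.path.join(output_dir, config["filename"])
    with open(output_path, "w", encoding="utf-8") as f:
        json.dump(data_list, f, ensure_ascii=False, indent=4)

    print(f"Saved {len(data_list)} records to {output_path}")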
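LLaMA-Factory looks up training datasets by name in data/dataset_info.json. The vendored LLaMA-Factory-0.9.4.dev0 and sft.sh may already register the two files. If not, the sketch below adds entries in LLaMA-Factory's sharegpt format for OpenAI-style messages. The dataset keys swe_lego_real and swe_lego_synthetic are hypothetical. The role tags assume each message has role and content fields with system, user, and assistant roles; trajectories that use other roles would need additional tags.

```python
import json
from pathlib import Path

DATA_DIR = Path("LLaMA-Factory-0.9.4.dev0/data")  # assumed: run from the SWE-Lego repo root

# Hypothetical dataset keys; the SFT config must reference whatever keys are actually registered.
NEW_DATASETS = {
    "swe_lego_real": "swe_lego_real_data_resolved_trajectories.json",
    "swe_lego_synthetic": "swe_lego_synthetic_data_resolved_trajectories.json",
}


def sharegpt_entry(file_name: str) -> dict:
    """dataset_info.json entry for OpenAI-style messages in LLaMA-Factory's sharegpt format."""
    return {
        "file_name": file_name,
        "formatting": "sharegpt",
        "columns": {"messages": "messages"},
        "tags": {
            "role_tag": "role",
            "content_tag": "content",
            "user_tag": "user",
            "assistant_tag": "assistant",
            "system_tag": "system",
        },
    }


def register(data_dir: Path, datasets: dict[str, str]) -> dict:
    """Add entries to data_dir/dataset_info.json, keeping any existing ones; return the result."""
    info_path = data_dir / "dataset_info.json"
    info = json.loads(info_path.read_text(encoding="utf-8")) if info_path.exists() else {}
    for key, file_name in datasets.items():
        info.setdefault(key, sharegpt_entry(file_name))  # never overwrite an existing entry
    info_path.write_text(json.dumps(info, indent=2, ensure_ascii=False), encoding="utf-8")
    return info
```

Calling register(DATA_DIR, NEW_DATASETS) from the repo root adds the two entries. A training config could then refer to the datasets by those keys. Because the function uses setdefault, it leaves existing entries untouched.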

3.2 Running SFT via LLaMA-Factory

bash scripts/swe_lego_qwen3_8b/sft.sh

bash scripts/swe_lego_qwen3_32b/sft.sh

Acknowledgements

This project acknowledges the valuable contributions of the following open-source repositories:

- SWE-bench: https://github.com/princeton-nlp/SWE-bench - A benchmark for evaluating AI agents' software engineering capabilities, which informed the evaluation design of this project.

- OpenHands: https://github.com/All-Hands-AI/OpenHands - Provides core logic for AI agent interaction and runtime management, referenced in this project's execution modules.

- LLaMA Factory: https://github.com/hiyouga/LLaMA-Factory - Offers LLM fine-tuning utilities, used for the supervised fine-tuning stage of this project.

Citation 📝

Please cite our paper if you find the repo helpful in your work:

@misc{swelego,
  title={SWE-Lego: Pushing the Limits of Supervised Fine-tuning for Software Issue Resolving},
  author={Chaofan Tao and Jierun Chen and Yuxin Jiang and Kaiqi Kou and Shaowei Wang and Ruoyu Wang and Xiaohui Li and Sidi Yang and Yiming Du and Jianbo Dai and Zhiming Mao and Xinyu Wang and Lifeng Shang and Haoli Bai},
  year={2026},
  eprint={2601.01426},
  archivePrefix={arXiv},
  primaryClass={cs.SE},
  url={https://arxiv.org/abs/2601.01426},
}
